Two of the greatest strengths of the human mind in marketing:

- Our ability to detect patterns from imperfect information.

- Our ability to tell stories that resonate and spread.

Ironically, these are also two of our biggest weaknesses in data-driven marketing.

I’m presenting today at DemandCon on a panel about effective marketing management with data. The official title of the session is “measuring marketing impact.” Yet while I am a firm believer in the power of data in modern marketing, I am wary of the many ways in which data can be abused.

So I may be playing the foil on today’s panel.

Most of what I’ll be discussing is covered in two posts that I wrote last month:

- 14 rules for data-driven, not data-deluded, marketing

- Strategic data vs. data theater in data-driven marketing

But I have two new images that I’ll be sharing with the audience to illustrate the points at the top of this post.

Patterns are everywhere, but dead men don’t wear plaid

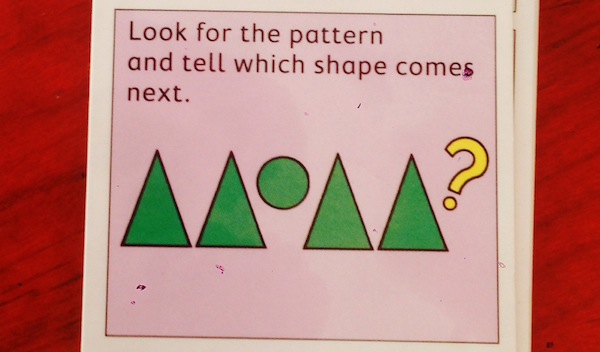

First, there’s this one, which came from a set of my daughter’s pre-school flip cards:

What shape comes next? It’s almost reflexive: circle.

Our brains are wired to naturally detect these inductive patterns. It’s a feature. But it’s also a bug. Because, when you think about it, that’s an incredibly small sample of data that we’re looking at. How can we conclude the answer is “circle” so swiftly and confidently?

How do we know that the pattern isn’t one triangle, one circle, two triangles, one circle, three triangles, one circle, and so on? That’s certainly a possibility. And if we saw a larger sample of data that followed it, we’d instantly conclude that the next objects would be four triangles followed by one circle, and so on.
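To make that ambiguity concrete, here’s a minimal Python sketch comparing two candidate rules. It assumes, purely for illustration, that the card shows triangle, circle, triangle; the card’s actual contents aren’t reproduced in the text. The rule names `alternating` and `growing_runs` are hypothetical. Both rules fit the assumed sample perfectly, yet they disagree about what comes next.

```python
from itertools import islice


def alternating():
    """Hypothetical rule A: triangle, circle, triangle, circle, ..."""
    while True:
        yield "triangle"
        yield "circle"


def growing_runs():
    """Hypothetical rule B: n triangles then one circle, for n = 1, 2, 3, ..."""
    n = 1
    while True:
        for _ in range(n):
            yield "triangle"
        yield "circle"
        n += 1


def take(rule, k):
    """Return the first k shapes produced by a rule."""
    return list(islice(rule(), k))


# Assumed sample from the flip card (illustrative only).
observed = ["triangle", "circle", "triangle"]

for rule in (alternating, growing_runs):
    fits = take(rule, len(observed)) == observed
    prediction = take(rule, len(observed) + 1)[-1]
    print(f"{rule.__name__}: fits sample={fits}, predicts next={prediction}")
```

Under this assumed sample, the alternating rule predicts a circle and the growing-runs rule predicts a triangle. Nothing in three data points can tell them apart; only more data, or a test, can.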

Yet we can invent many other patterns too — an infinite number, actually.

We also have to consider noise. What if the sample we saw had some errors or misreadings? Someone recorded a triangle where there should have been a circle, or accidentally drew a hexagon instead of a circle. How robust would our pattern detection be to such errors? The answer: it depends. It depends on the pattern, the noise, and frankly, whether there really is a pattern at all, or just something that coincidentally looks like one.

Our ability to detect patterns in data is a powerful gift. But we can deceive ourselves if we simply take patterns at face value. The pragmatic marketing approach is to “detect but verify.”

We can seek to triangulate the pattern with other sources of data. Or, better yet, we can run experiments to test our hypotheses. While we can never get a perfect guarantee, we can use such techniques to dramatically increase the likelihood that the pattern we’ve identified is real (and meaningful).
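As one illustration of testing a pattern rather than trusting it, here’s a minimal Python sketch of a two-proportion z-test, a common (but not the only) way to check whether a difference in conversion rates between a control and a variant in an A/B test is bigger than chance alone would plausibly produce. The function name and the example counts are hypothetical, and the sketch assumes independent visitors and samples large enough for the normal approximation to hold.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.

    conv_a, conv_b: number of conversions in each group
    n_a, n_b: number of visitors in each group
    Returns (z statistic, two-sided p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal tail: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: control converts 200 of 5,000 visitors,
# the variant converts 250 of 5,000.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value doesn’t prove the pattern is real; it only says the observed difference would be unlikely if there were no true difference. That’s the “dramatically increase the likelihood” kind of evidence, not a guarantee.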

All data-driven marketers are storytellers

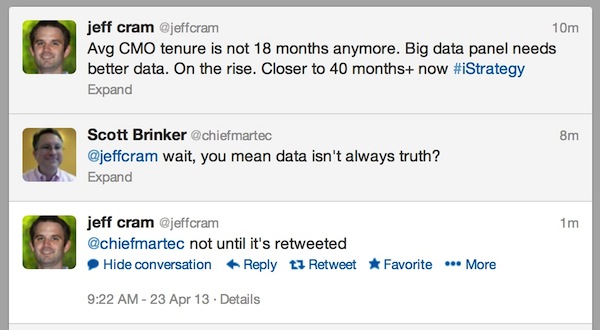

The second image is this exchange I had with Jeff Cram a few months ago on Twitter:

Jeff was admonishing a big data panel for using outdated data to make an important point. More recent data actually contradicted their argument. As Robert Plant said, that’s “pumping irony.” So I made a wisecrack about how data isn’t truth. Jeff quipped back, “Not until it’s retweeted.”

And that’s the problem in a nutshell.

Marketers, perhaps more than anyone, are excellent storytellers. Seth Godin has made that point eloquently. As it turns out, we’re also highly susceptible to good stories.

So we can take an anecdote of data that we hear once — perhaps from some survey that someone ran years ago, with who-knows-what kind of methodology, that produced a tantalizing soundbite that has bounced around the web with various interpretations and embellishments — and accept it as gospel. We can then weave it into our own sermon. We believe it. And with enough passion, we can persuade others to believe it.

But that doesn’t necessarily make it true.

At least not true in the present, at a different time, under different circumstances.

Don’t get me wrong. Raw data is boring as hell. To leverage data to accomplish good marketing, we have to put it in the context of a meaningful story. But the more scientifically accurate word would be “hypothesis,” not “story.”

As with our prone-to-misfiring pattern matching talents, we should listen to but verify such stories — even our own. If it’s a story that we’re going to hang any important initiative on (or our reputation as an authority on a conference panel), it is worth verifying that we have the latest and most relevant source.

Where feasible, the best thing we can do — again — is to run an experiment to verify that hypothesis in the native context where we expect to apply it. The power of experimentation to separate data myths from data reality is the key reason why I continually advocate for big testing over big data.

In our digital age, perhaps the biggest mistake marketers can make is to ignore the data. But the second biggest mistake may be to trust the data too much.
