How formal should your marketing experimentation programs be?

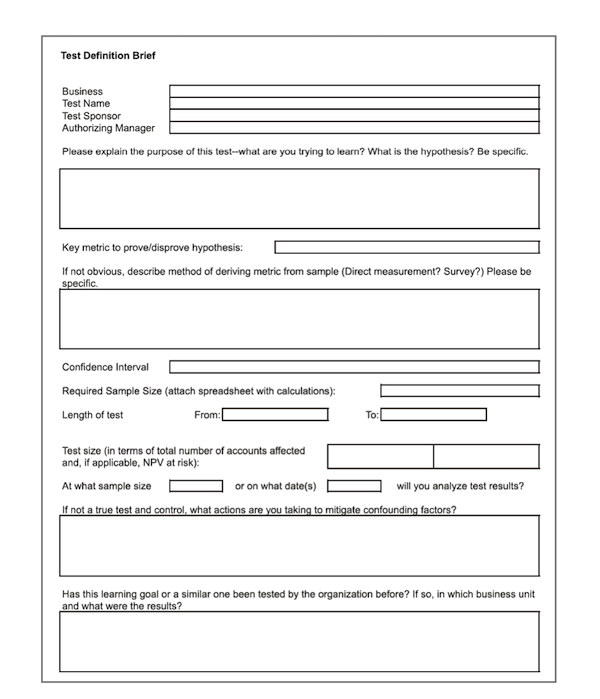

That was the question I was asking myself as I looked at the “test definition brief” above, which was included in a report jointly produced by Google and the Marketing Leadership Council: The Digital Evolution in B2B Marketing.

The report — which I think is terrific overall for a behind-the-scenes look at how several larger enterprises are integrating digital marketing more holistically into their organizations — raises the concern that marketing experiments need to be better structured to make their results more reliable.

“Many B2B companies apply experiments in extremely narrow circumstances (e.g., testing subject line effectiveness for e-mail campaigns),” they write, “but are less active in tackling the bigger questions related to multichannel interactivity and performance in a rigorous way.”

They caution that experiments executed without deeper consideration of confounding factors and the actionability of findings “may not convincingly support or disprove the debated hypothesis or provide a sufficient basis for decision-making.”

Their recommendation is to establish a more documented and disciplined process for conducting marketing experiments, such as requiring marketers to fill out the brief above to justify any proposed experiment. The report doesn’t say so explicitly, but presumably these briefs would be reviewed and approved by some marketing experimentation governance group, which would enforce proper test design and adjudicate conflicting experiments.

Marketing experiment police or marketing experiment coaches?

However, I found myself somewhat conflicted about their recommendations.

On the plus side, it’s wonderful that controlled experiments are being given top-level attention in enterprise marketing. “Structured experiments are the best way to resolve competing hypotheses on how digital tactics impact customers,” they state in bold. Amen. This is one of the reasons I believe big testing will be bigger than big data.

Therefore, I agree that it’s important for marketers to learn how to run good experiments — to think about an explicit hypothesis, to clarify the metric of success, to weigh confounding factors.

I also agree that when marketers are running big tests (tests that will affect their brand or their business model in significant ways), it’s well worth having more formal governance over the process. The same applies when running tests that must be sequenced and prioritized, such as experiments on a large company’s primary web site.

And I’m in favor of keeping a record of experiments, particularly the bigger ones, so that lessons learned, in process and in outcome, can be shared. Such a record also helps organizations measure how their experimentation process is evolving. More on that in a bit.

On the minus side, however, I’m concerned that too much formality, strictly enforced in all scenarios, could dramatically constrain the growth of grassroots marketing experimentation. Although the report somewhat denigrates testing email subject lines, my experience is that such low-hanging fruit (A/B testing in email marketing, paid search marketing, and landing pages for the web and mobile devices) goes unpicked far too often.

That’s a shame, because there’s tremendous value to be unlocked there. Even if the gains from any one tactical-level test apply to a narrower audience for a narrower objective, collectively, hundreds or thousands of such experiments can deliver an enormous aggregate boost to marketing performance. A 20% lift in this campaign, a 60% lift in the next, and so on: it adds up.

I’d also note that exploring many niche experiments at a tactical level can be fertile ground for seeding ideas for larger-scale experiments. You can think of low-level tactical marketing experimentation as a proving ground (er, testing ground) for bigger innovations.

And there’s upside to the narrowness of tactical testing too: because each individual test at that level is so narrow, the risk with any one such experiment is also proportionately narrow.

But if the process overhead to launch a test exceeds the perceived value to be gained from that test (e.g., it’s not worth going through the Grand Testing Committee just to try two different email subject lines), then enthusiasm for such front-line experimentation will dry up. That will make those organizations poorer: not only because they’ll miss out on the performance gains and ideation benefits of tactical-level testing, but also because they won’t be nurturing as broad a culture of testing.

It’s from the grassroots that the next generation of leaders will emerge. Don’t we want them growing up as experienced, data-driven marketers who embrace the scientific method of experimentation to push forward new innovation and performance goals?

So do you want marketing experiment police or marketing experiment coaches?

The answer is “it depends.”

Not all marketing experiments are created equal

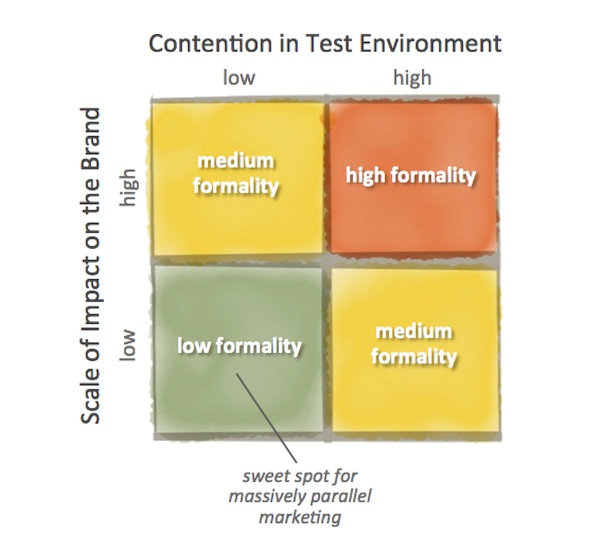

It depends on how big an impact the experiment may have on the brand, and on how much “contention” there is among multiple experiments that want to test in the same context.

With experiments that are going to have a big impact on the brand and are run in a particularly contentious context — for instance, the design and messaging of the home page of a company’s primary web site — you probably want a high degree of formality to your tests. It’s more important that you get those experiments right, in the sense of what they’re testing and how they’re testing it. It’s less important how many different tests you run.

At the other end of the spectrum, though, for experiments that have narrow impact and are relatively independent of other marketing efforts (an email subject line is the quintessential example), I believe the organization benefits from much lower formality.

You still want tests to be run well, so training and best practices are helpful. But the quantity of experiments being run here has significant value. Again, it’s only through the aggregate of these narrow tests that you move the needle for the organization as a whole — both in performance and culture. And the more ideas you try, the more you increase your odds of finding a real diamond of innovation that you’ll want to scale up.
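A back-of-the-envelope way to see why volume matters: if each test independently had some small probability p of turning up an idea worth scaling, the chance that at least one of n tests does so is 1 - (1 - p)^n. The sketch below assumes that idealized model; the function name and the 1% figure are hypothetical, and real tests are neither independent nor equally promising, so treat this as intuition rather than a forecast.

```python
def chance_of_breakthrough(p: float, n: int) -> float:
    """Probability that at least one of n tests finds a scalable idea.

    Idealized assumption: every test has the same chance p and tests are independent.
    """
    if not 0.0 <= p <= 1.0 or n < 0:
        raise ValueError("p must be in [0, 1] and n must be non-negative")
    return 1 - (1 - p) ** n


# Hypothetical 1% chance per test, at three different testing volumes.
for n in (5, 50, 500):
    print(n, f"{chance_of_breakthrough(0.01, n):.1%}")
```

The curve rises steeply with n, which is the quantitative version of "the more ideas you try, the better your odds."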

Taken to its ideal, running many low-formality tests at once is something I call massively parallel marketing (I wrote an article on that for Search Engine Land, where I illustrate an example in the context of paid search marketing experimentation).

The matrix above illustrates the not-all-experiments-are-equal principle, with “low formality” and “high formality” as the two ends of the spectrum, while acknowledging that some cases will likely fall in the middle and deserve “medium formality.” For instance, a smaller-impact website experiment (say, testing different labels for a global navigation choice) has low impact on the brand, but it happens in an environment that must be tightly controlled. You should have only one global website navigation experiment running at a time.
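To make the matrix concrete, here is a minimal sketch that maps an experiment's two ratings (brand impact and contention) onto a formality tier. The `Level` enum and `formality` function are hypothetical names, and the two-level ratings are a simplification; treating the high-impact, low-contention corner as "medium" is an assumption about the in-between cases rather than something the matrix spells out.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


def formality(brand_impact: Level, contention: Level) -> str:
    """Map a position in the impact/contention matrix to a formality tier.

    Assumed 2x2 reading: both high -> "high", both low -> "low",
    any mixed combination -> "medium".
    """
    if brand_impact is Level.HIGH and contention is Level.HIGH:
        return "high"
    if brand_impact is Level.LOW and contention is Level.LOW:
        return "low"
    return "medium"


# Examples taken from the scenarios described in the text.
print(formality(Level.HIGH, Level.HIGH))  # home page design and messaging -> high
print(formality(Level.LOW, Level.LOW))    # email subject line -> low
print(formality(Level.LOW, Level.HIGH))   # global navigation label test -> medium
```

In practice an organization would likely rate each dimension on a finer scale, but the lookup stays the same shape: the tier is a function of where the experiment sits on both axes.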

High formality scenarios need marketing experimentation police. Low formality scenarios, frankly, need more marketing experimentation coaches who encourage more people to run more tests in this quadrant of the matrix.

Metrics for marketing experimentation in your organization

That leads to an important question: how do you gauge whether your marketing experimentation programs are going well?

The obvious metric is performance. For the different experiments you ran, what was the lift in the corresponding KPIs achieved? Can you quantify those improvements in terms of additional leads generated or additional revenue transacted?
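As a rough illustration of this performance metric, the sketch below computes relative lift as (variant rate - control rate) / control rate and converts the rate difference into incremental leads. The function names and campaign figures are hypothetical, chosen only to echo the 20% and 60% lifts mentioned earlier, and it assumes each campaign's full audience would otherwise have seen the control.

```python
def lift(control_rate: float, variant_rate: float) -> float:
    """Relative lift of the variant over the control (0.20 means a 20% lift)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


def incremental_leads(control_rate: float, variant_rate: float, audience: int) -> float:
    """Extra leads from showing the variant, rather than the control, to `audience` people."""
    return (variant_rate - control_rate) * audience


# Hypothetical results: (test name, control conversion rate, variant conversion rate, audience size)
tests = [
    ("email subject line", 0.050, 0.060, 40_000),
    ("landing page headline", 0.020, 0.032, 15_000),
]

total = 0.0
for name, control, variant, audience in tests:
    extra = incremental_leads(control, variant, audience)
    total += extra
    print(f"{name}: {lift(control, variant):.0%} lift, {extra:.0f} extra leads")

# Percentage lifts on different campaigns don't add up directly,
# but the incremental leads they produce do.
print(f"total incremental leads: {total:.0f}")
```

Summing incremental outcomes rather than averaging percentages is what lets many narrow tests be rolled up into one number for the organization.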

But a performance metric only measures the experiments you actually ran; it doesn’t capture any of the opportunity cost of experiments that you potentially could have run. So an organization that runs a small number of experiments with good success can celebrate those results, but what indicators will encourage it to do even better?

I’d propose that marketing teams also examine these meta-metrics about their marketing experimentation process:

- What percentage of the marketing department staff actively engage in experimentation?

- What’s the average number of experiments run per marketing staff member?

- What’s the average amount of time to take an experiment from concept to launch?

- What’s the average number of people involved — in any capacity — for the lifecycle of an experiment?

These metrics home in on the scale, velocity, and overhead of your marketing experimentation capabilities. It probably makes sense to segment them according to the different quadrants of the experimentation matrix, since you should expect different ratios for low formality vs. high formality experiments.
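Here is a minimal sketch of how the four meta-metrics might be computed from a simple experiment log and segmented by formality tier. The `Experiment` record, its fields, and the sample entries are hypothetical; a real program would draw on whatever record of experiments it keeps. "Actively engaged" is approximated here as having owned at least one logged experiment, which is an assumption.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Experiment:
    owner: str            # staff member who ran the experiment
    formality: str        # "low", "medium", or "high"
    concept_date: date    # when the idea was first written down
    launch_date: date     # when the test went live
    people_involved: int  # everyone involved, in any capacity


def meta_metrics(experiments: list[Experiment], staff_count: int) -> dict[str, float]:
    """Scale, velocity, and overhead metrics for a non-empty group of experiments."""
    if not experiments or staff_count <= 0:
        raise ValueError("need at least one experiment and a positive staff count")
    owners = {e.owner for e in experiments}
    return {
        # scale: share of all staff who owned at least one experiment in this group
        "pct_staff_experimenting": 100 * len(owners) / staff_count,
        "experiments_per_staff": len(experiments) / staff_count,
        # velocity: concept-to-launch time in days
        "avg_days_to_launch": mean((e.launch_date - e.concept_date).days for e in experiments),
        # overhead: people touching each experiment
        "avg_people_involved": mean(e.people_involved for e in experiments),
    }


def by_formality(experiments: list[Experiment], staff_count: int) -> dict[str, dict[str, float]]:
    """Compute the meta-metrics separately for each formality tier."""
    tiers: dict[str, list[Experiment]] = {}
    for e in experiments:
        tiers.setdefault(e.formality, []).append(e)
    return {tier: meta_metrics(group, staff_count) for tier, group in tiers.items()}


# Hypothetical log for a 10-person marketing team.
log = [
    Experiment("ana", "low", date(2024, 3, 1), date(2024, 3, 3), 1),
    Experiment("ana", "low", date(2024, 3, 5), date(2024, 3, 6), 1),
    Experiment("ben", "low", date(2024, 3, 2), date(2024, 3, 5), 2),
    Experiment("cho", "high", date(2024, 1, 10), date(2024, 3, 1), 8),
]

for tier, metrics in by_formality(log, staff_count=10).items():
    print(tier, {k: round(v, 2) for k, v in metrics.items()})
```

Calling `meta_metrics(log, 10)` on the whole log gives the department-wide view; the per-tier breakdown is what lets you compare, say, launch times for low formality experiments against high formality ones.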

Since “low formality” and “high formality” are rather subjective labels, these meta-metrics help you tune toward the optimal structure for your organization. Of course, you’ll want to do so while maintaining good performance metrics, so you don’t find yourself prioritizing quantity at the expense of quality.

Ultimately, the answer to the question of how formal your marketing experiments should be is: as formal (or informal) as they need to be, in context, to deliver results.
