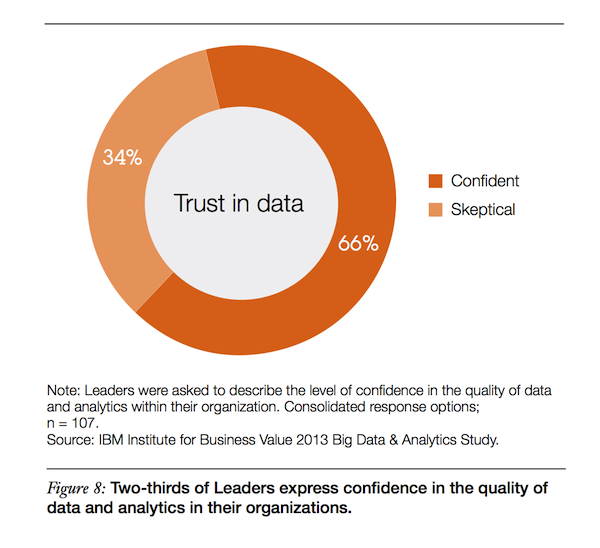

There’s a great new report out by the IBM Institute for Business Value, Analytics: A blueprint for value, that’s well worth reading. The above graphic — stating that 66% of leaders from their research are “confident” in their data and “trust” it — is excerpted from it.

However, this post is not about that report (well, not really).

Let me first disclaim that the context of that graph, as best as I can tell, is that leaders are confident about using data in day-to-day decision-making. They trust the quality of the data, where quality is a function of timeliness and accuracy within a set of enterprise-class data standards. They have faith in the capabilities of their analytics teams and technologies.

And that’s all good.

But the choice of labels on that graph — admittedly, taken out of context — triggered an important tangent that I think is worth discussing. It’s good to be confident in embracing data-driven management, but great data-driven managers should always be skeptical of the data.

That isn’t a contradiction, even if it sounds like one.

A tale of two strategies and the data that stood between them

Let me start with a real-world example, which a director of marketing at a high-tech company recently shared with me (with some identifiable details changed for anonymity). They sell an IT infrastructure product, but one that is typically evaluated by front-line IT managers rather than the CIO. However, as part of their growth strategy, they sought the CIO’s attention to sell broader solutions.

As one way to accomplish that, the director proposed making changes and additions to their website to address CIOs more directly. Yet he got tremendous pushback from the web marketing team who, referring to their web analytics, insisted that the data showed they shouldn’t do that. The deeper and more technical their content was, the more visits and shares it received. Their data-driven conclusion was that they should create more front-line manager content, rather than content for the CIO.

Since the web marketing team was evaluated by metrics such as visits and shares — chalk one up for data-driven incentives — they were reluctant to pursue any initiatives that didn’t linearly extrapolate from their existing strategy. Note the circular nature of this argument:

- We produce web content for front-line IT managers.

- This gets us visits and shares from front-line IT managers.

- Therefore, we should produce more content for front-line IT managers.

This isn’t being data-driven. It’s more like being data-enslaved.

At no point in this debate did anyone doubt the “accuracy” of the data. But that data exists in a context. The director was interested in a different context. He was asking questions in the context of CIOs, but the web team was answering with data in the context of front-line IT managers.

Looking at this from afar, it may seem obvious — there are many options for how to resolve this dispute. But strategic inflection points like this are a natural place for such disconnects to occur. In some ways, this is Clay Christensen’s classic innovator’s dilemma: what worked in the past holds us back from what needs to change in the future. Data from the previous strategic context can further calcify that prior worldview. To break out of the dilemma, you need to consider different data.

It’s not a question about whether the data is right, but whether it’s the right data.

By the way, this story has a happy ending. They set up a different site for content marketing to CIOs — an experiment to see if this marketing director’s hypothesis could be proven — which grew to be very successful. It never received as much absolute traffic as the site that served front-line IT managers — after all, there are many, many more front-line IT managers than CIOs out there. But their pipeline for CIO-level solution sales grew tremendously, as did their brand reputation at the C-suite level.

Being skeptical of the data, not skeptical of using data

Data was supposed to settle arguments, to get us out of situations where people just argued from their gut until somebody eventually pulled rank (the HiPPO, or highest-paid person’s opinion).

Ironically, however, it can have the opposite effect:

- People develop a belief.

- They find data that correlates with that belief.

- They use that data to cement their belief into an unassailable tower.

One need look no further than the rancor of partisan politics in the U.S., and the ways in which opposing nuggets of data are used to cudgel the other side rather than objectively seek the truth, to see this folly in action. The two problems at the heart of this dilemma are:

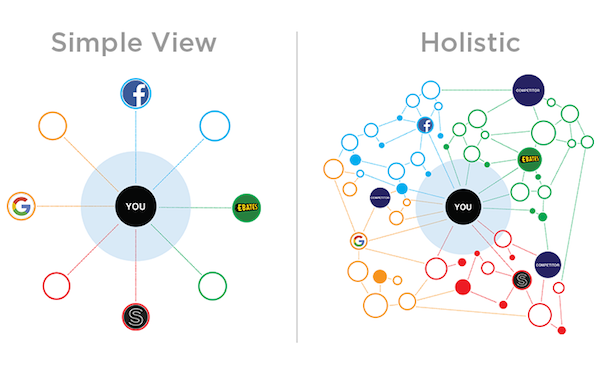

- There’s a nearly unlimited amount of data to choose from out there.

- There’s a nearly unlimited number of narratives one can build around that data.

Once we recognize that, we realize that data doesn’t necessarily settle arguments. Data can tell us what, but it can’t inherently tell us why. To uncover the why, we need a very specific kind of data: data from controlled experiments. Since why is often more important than what for deciding a future course of action, experimentation is a more powerful management tool than data analysis.

This is why I believe that big testing is more important than big data.
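To make the idea of a controlled experiment a little more concrete, here is a minimal sketch of how one might compare two versions of a page in an A/B test. It uses a standard two-proportion z-test; the function name, the visitor counts, and the conversion counts are all hypothetical and are not drawn from the story above.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (A) and variant (B).

    Returns the z statistic and the two-sided p-value under the
    null hypothesis that both versions convert at the same rate.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best single estimate if A and B are truly identical.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: 1,000 visitors randomly assigned to each version.
z, p_value = two_proportion_z_test(conv_a=40, n_a=1000, conv_b=65, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

Because visitors are assigned at random, a small p-value here speaks to why conversions differed (the change we made) rather than merely recording that they did. It is still only one signal, and it answers only the question the experiment was designed to ask.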

Of course, not all of the data we deal with comes from controlled experiments. For that matter, it’s very hard to have a perfectly controlled experiment in the real world. We have to deal with data that is imperfect in helping us answer why. But imperfect data can be far better than using no data at all.

The caveat of “can be” in that last sentence boils down to using data to make decisions, while simultaneously remaining skeptical of the narrative, implied or explicit, surrounding that data.

You can have confidence in using data while not fully trusting the data. It’s not that you doubt its accuracy. But you’re skeptical of data’s context, relevance, and completeness in the conclusions that are being drawn from it. (Note that skepticism is not cynicism — it’s about questioning for the purpose of progress, not questioning for the purpose of being obstinate.)

I’ve come to believe that the best way to work with data strategically is to adopt a Bayesian worldview, which has been advocated by two of my favorite marketing strategy thinkers, Gord Hotchkiss and Greg Satell.

In Bayesian strategy, it’s not about data being used conclusively, but data being used probabilistically to continuously update a mental model of the market. It’s not about certainty, but relative certainty — with an open mind to data that may alter one’s Bayesian beliefs. You proactively look for signals of change.
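As a rough illustration of what updating a belief probabilistically can look like, here is a minimal sketch using a Beta distribution over an unknown success rate. The `BetaBelief` class, the starting prior, and the evidence counts are all assumptions made up for this example; a real mental model of a market is far messier than a single number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Belief about an unknown success rate, held as a Beta(alpha, beta)."""

    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        # Expected success rate under the current belief.
        return self.alpha / (self.alpha + self.beta)

    def update(self, successes: int, failures: int) -> "BetaBelief":
        # Conjugate update: new evidence is simply added to the counts.
        return BetaBelief(self.alpha + successes, self.beta + failures)


# Start from an open-minded guess: every success rate is equally plausible.
prior = BetaBelief(alpha=1.0, beta=1.0)

# Hypothetical first batch of evidence: 3 successes, 7 failures.
after_first = prior.update(successes=3, failures=7)

# Hypothetical later batch pointing the other way: 15 successes, 5 failures.
after_second = after_first.update(successes=15, failures=5)

for label, belief in [("prior", prior),
                      ("after first batch", after_first),
                      ("after second batch", after_second)]:
    print(f"{label}: expected rate = {belief.mean:.3f}")
```

The belief is never declared settled. Each batch of evidence shifts it, and later data that contradicts the earlier picture pulls it back.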

“Fact-based decision-making” is a term that’s growing in popularity. And while I laud the intent behind it — data over opinions — I remain cautious that “facts” are a slippery thing, especially when you go from the what to the why. Opinions can be all too easily disguised with data to look like facts.

Good fact-based decision-making reveals itself by being as open to data that contradicts the current direction as it is to data that confirms — or can be interpreted, with some creative narrative, to confirm — the status quo.

P.S. For a shorter version of other heuristics for thinking about data, see my post earlier this year on 14 rules for data-driven, not data-deluded, marketing.

Great post Scott!

I would like to add one point that I think is important. In Bayesian statistics, it’s okay to guess (as a matter of fact, you’re supposed to guess), which is why Fisher and his followers hated Bayes so much. In effect, you don’t need to have any data at all to have an idea. It’s okay if your idea is crazy, stupid or even just plain wrong.

The point is, as you suggested, to test ideas so that we become less wrong over time.

– Greg

I’ve been in the data business (engineering, financial, marketing) long enough to have seen many a justification created after a decision was made prior to consulting the data. Selecting the correct metric(s) over the right time period with appropriate subsets ignored by applying obligatory assumptions can always be used to create a compelling story.

My solution: First, understand the incentives for specific justifications to be desired. Then, get all the data and probe it from multiple angles to see what it tells me, before passing the implied truth on to those making the decisions.