There is tremendous excitement around data-driven marketing. A whole catalog of data-related phrases is echoing throughout the marketing world: big data, data mining, data science, data exchanges, data management platforms, controlled experiments (“big testing”), analytics, metrics, dashboards, etc.

But the data driving all these different activities isn’t quite the same. Or, more accurately, the contexts in which marketers use that data aren’t the same.

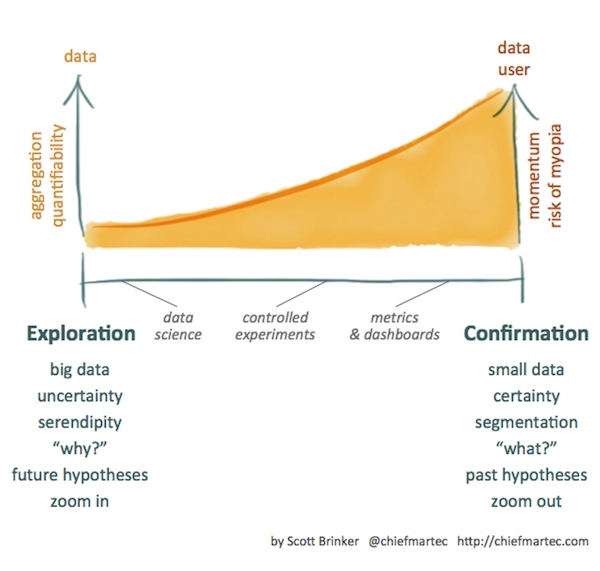

Different data-driven activities change how you should think about data, manage it, and ultimately derive value from it. An important axis is the degree to which marketing data is used in the context of exploration versus confirmation. As illustrated above, this can be pictured as a continuum, with different data activities falling somewhere along that line.

An example of exploration with data would be retail transaction histories of tens of thousands of customers — a typical “big data” set — that a marketer wants to mine for interesting patterns of behavior, perhaps to power targeted campaigns or to predict optimal inventory mixes for different seasons and locations.

The quintessential example of confirmation with data is financial results: this quarter’s revenues and expenses, both in total and broken out by product, location, and time period, compared with previous quarters. Such aggregated and more quantified data is typically “small data” — often represented in a spreadsheet.

Yes, I know, the same atoms of data feed both of these examples, but the context is quite different.

In exploration, the marketer works directly with the massive number of individual data atoms, searching for relationships in an open-ended manner. In confirmation, the marketer looks at that data in a more aggregated form, where the masses of individual data atoms are rolled up according to predetermined and highly structured relationships.

Exploration zooms in on data granularity. Confirmation zooms out to the bigger picture.
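To make the contrast concrete, here is a minimal Python sketch. The transaction records, field names, and the `roll_up` and `repeat_customers` helpers are all hypothetical, invented for illustration. Confirmation sums the same atoms along predetermined dimensions; exploration asks an open-ended question of the raw records.

```python
from collections import defaultdict

# Hypothetical transaction "atoms": one record per purchase (illustrative data only).
transactions = [
    {"customer": "c1", "product": "shoes", "store": "Boston", "quarter": "Q1", "amount": 120.0},
    {"customer": "c2", "product": "shoes", "store": "Denver", "quarter": "Q1", "amount": 95.0},
    {"customer": "c1", "product": "socks", "store": "Boston", "quarter": "Q2", "amount": 15.0},
    {"customer": "c3", "product": "jacket", "store": "Denver", "quarter": "Q2", "amount": 200.0},
]

def roll_up(records, *dimensions):
    """Confirmation view: sum revenue along predetermined dimensions."""
    totals = defaultdict(float)
    for r in records:
        key = tuple(r[d] for d in dimensions)
        totals[key] += r["amount"]
    return dict(totals)

def repeat_customers(records):
    """One exploratory question asked of the raw atoms: who bought more than once?"""
    counts = defaultdict(int)
    for r in records:
        counts[r["customer"]] += 1
    return sorted(c for c, n in counts.items() if n > 1)

print(roll_up(transactions, "quarter"))  # {('Q1',): 215.0, ('Q2',): 215.0}
print(roll_up(transactions, "product", "quarter"))
print(repeat_customers(transactions))  # ['c1']
```

The roll-up can only answer questions its chosen dimensions anticipate; the exploratory function is just one of countless questions the same atoms could support.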

Exploration is usually about answering “why?” Why are customers motivated to act (or not act, as the case may be)? Confirmation is more about answering “what?” What happened in our efforts to motivate customers to act?

The journey from exploration to confirmation

The exploration side of this continuum is where big data typically lives. Individual data atoms here may be structured (such as a record of an individual purchase transaction) or more unstructured (such as the text from an individual tweet). But to the marketer who’s looking at it in its raw form, this sea of data doesn’t inherently reveal a higher meaning.

This is both a feature and a problem.

It’s a feature because it presents a powerful opportunity for the discovery of new meaning. Assigning meaning to data requires assumptions and choices in how that data is interpreted. The raw data is filtered, transformed, and aggregated according to those choices to winnow that unmanageable sea into something concrete that a human can grasp, use, and understand. This is the process by which data turns into information and knowledge.

The problem, unfortunately, is that those exact same assumptions and choices also shut out a huge number of other possible meanings. The same set of underlying raw data can support a nearly infinite number of different interpretations. If you have any doubt about that, consider the way politicians use data to support their rhetoric. But when you’re using those interpretations to make business decisions, having the right interpretation can determine success or failure.

So the $1 million question is: how do you know you’ve got the right interpretation?

The good news is that there isn’t necessarily just one right interpretation. As they say, if you’re seeking absolute truth, the philosophy department is down the hall. All that matters in business is that your interpretation is a good one in the sense that it pragmatically helps you achieve your goals. And you can judge one interpretation “better” than another by the degree to which it improves your results.

Of course, in some scenarios, particularly around finance, the “right” interpretation is dictated to you by rules from your boss, your CFO, GAAP standards, government agencies such as the SEC, etc. But for most marketing activities, the onus is on you to decide which interpretations to use. That’s a source of great power, but also of great responsibility.

So how do you decide which interpretations to adopt?

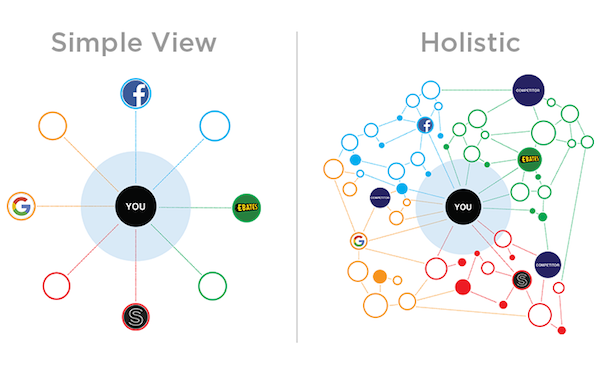

The easy way is to simply go with pre-canned interpretations that are built into your chosen marketing software. When you run a standard report or glance at a fixed dashboard, you’re viewing the associated data through a lens that the software developer thought was a good interpretation. It may be. But this is one of the reasons I caution marketers that you are the software you use: the interpretations of data built into that software make choices and assumptions on your behalf.

To branch out further, you may turn to “best practices” for interpretation recommended by independent consultants, peers, and other organizations.

However, no two businesses are exactly alike. In fact, most business strategies are based on achieving meaningful differentiation from competitors and substitutes. Therefore, eventually, you’ll want to develop your own interpretations of data that power your unique competitive advantage. This is where data science and data scientists can help.

But the interpretations that data science uncovers are often unproven hypotheses at first. Exploration is rife with uncertainty. To prove that a particular interpretation of data can be productively harnessed to achieve business value usually requires the marketer to run an experiment.

Experiments are the bridge from exploration to confirmation.

Experiments can be explicit or implicit. To be explicit, one runs a controlled experiment, where the marketer executes a split test between two or more different alternatives while trying to hold other variables constant, as much as possible in the complex and messy environment of real-world marketing. Controlled experiments are incredibly powerful for proving cause-and-effect relationships that impact customer behavior in a highly focused manner. They’re “explicit” because the marketer directly decides almost every aspect of how the experiment is run.
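The post doesn’t prescribe any particular statistics, but one common way to read a two-variant split test with a yes/no conversion outcome is a pooled two-proportion z-test. The Python sketch below assumes large samples (so the normal approximation holds), and the visitor and conversion counts are hypothetical.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test for a two-variant split test.

    conv_*: number of visitors who converted; n_*: visitors who saw that variant.
    Returns (absolute lift of B over A, z statistic, two-sided p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        raise ValueError("no variation in outcomes; the test is undefined")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value under the normal approximation: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value

# Hypothetical results: 200 of 10,000 visitors converted on A, 260 of 10,000 on B.
lift, z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"lift={lift:.4f} z={z:.2f} p={p:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone; it doesn’t, by itself, say whether the lift is large enough to matter to the business.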

An example of implicit marketing experimentation is personalization software that uses machine learning techniques to try many different possible ways of leveraging a certain set of data in a customer’s experience to affect their behavior. These experiments are “implicit” in the sense that the marketer is not directly determining which hypotheses are being tested. (This tends to work best in situations where the hypotheses being tested by the software are low-risk, more about discovering serendipity than fulfilling explicit customer intentions.)
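The post doesn’t say which machine learning techniques such software uses, and commercial tools may work quite differently. As a simple stand-in, here is a Python sketch of an epsilon-greedy multi-armed bandit: it mostly shows the variant that has converted best so far, but keeps testing the others a fraction `epsilon` of the time. The variants’ true conversion rates, the round count, and `epsilon` are hypothetical simulation parameters.

```python
import random

def epsilon_greedy(true_rates, rounds=20_000, epsilon=0.1, seed=7):
    """Simulate an epsilon-greedy bandit that picks which content variant to show.

    true_rates are hypothetical conversion probabilities; the algorithm never
    sees them directly, only the simulated conversions they produce.
    Returns (times each variant was shown, observed conversion rate per variant).
    """
    rng = random.Random(seed)
    n = len(true_rates)
    shows = [0] * n
    wins = [0] * n
    for _ in range(rounds):
        if 0 in shows:
            arm = shows.index(0)  # show every variant at least once
        elif rng.random() < epsilon:
            arm = rng.randrange(n)  # explore: try a random variant
        else:
            # exploit: show the variant with the best observed rate so far
            arm = max(range(n), key=lambda i: wins[i] / shows[i])
        shows[arm] += 1
        if rng.random() < true_rates[arm]:  # simulated visitor converts
            wins[arm] += 1
    observed = [w / s for w, s in zip(wins, shows)]
    return shows, observed

shows, observed = epsilon_greedy([0.02, 0.10, 0.04])
print(shows)
print([round(r, 3) for r in observed])
```

The marketer picks the variants and the metric, but the algorithm decides which variant each visitor sees, which is what makes the experimentation “implicit.”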

Either way, the performance metrics resulting from those experiments provide confirmation of which hypotheses work. Experiments move you from uncertainty to certainty — at least as much certainty as can be determined by outcomes. The movement from exploration to confirmation builds marketing momentum.

Put another way: exploration feeds future hypotheses, while confirmation illuminates the outcomes of past hypotheses.

One person’s aggregation is another person’s atom

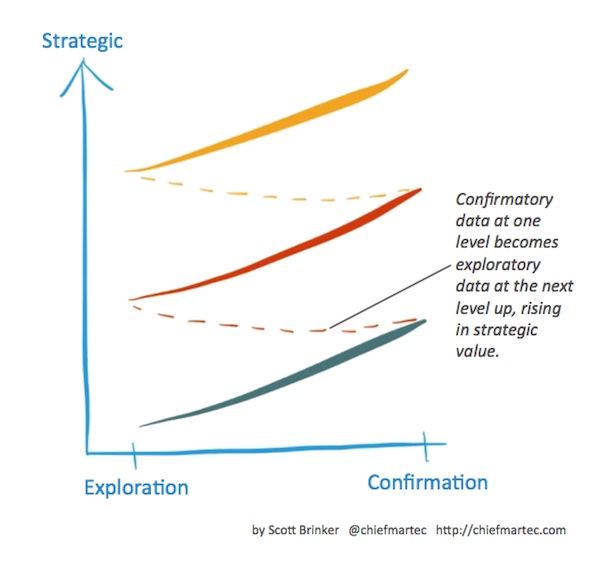

Interestingly, along this exploration-confirmation continuum of data-driven activities, one person’s aggregated confirmation data may become atoms in another person’s sea of data for exploration at the next level up in the organization.

For instance, a front-line web marketer might explore individual data points from thousands of actions taken by thousands of visitors, use that data to form hypotheses for conversion optimization experiments, and confirm success through increases in aggregate metrics such as lead generation counts or online e-commerce sales.

However, a more senior marketing executive may consider the performance of activities on that particular website as one piece of a multi-channel marketing strategy. That marketer at the next level up would explore performance data from many different channels — where data atoms may be as granular as individual interactions with prospects, but probably not as deep as analyzing the raw sequences of every action of every web user the way that front-line web marketer did.

Data exploration for this senior marketer would drive hypotheses about investments in different channels and how to maximize the synergy between them. Confirmation would be performance data at a much higher level, most likely expressed in terms of the financial P&L.

In turn, the business unit of that marketing executive may be one of many business units in a much larger enterprise. The confirmation data from all of these different units may feed into an exploratory process for the enterprise CMO.

The higher you go, the more strategic such data exploration becomes.
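A toy Python sketch of this layering follows. Everything in it is hypothetical: the event tuples, the channel names, the spend and lead figures, and the helper functions. The front-line metric (a lead count) is computed from raw web events, and then becomes just one row in the table a senior marketer explores across channels.

```python
from collections import Counter

# Front-line level: hypothetical raw web events, one (visitor, action) atom each.
web_events = [
    ("v1", "view"), ("v1", "signup"),
    ("v2", "view"),
    ("v3", "view"), ("v3", "signup"),
]

def leads_from_events(events):
    """Front-line confirmation metric: how many signup actions (leads) occurred."""
    return Counter(action for _, action in events)["signup"]

# Next level up: each channel's aggregate becomes one atom in a cross-channel table.
# Spend figures and the non-web lead counts are made-up illustrative numbers.
channel_atoms = [
    {"channel": "website", "spend": 1000.0, "leads": leads_from_events(web_events)},
    {"channel": "email", "spend": 400.0, "leads": 5},
    {"channel": "events", "spend": 3600.0, "leads": 6},
]

def cost_per_lead(atoms):
    """One exploratory cut a senior marketer might take across channels."""
    return {a["channel"]: a["spend"] / a["leads"] for a in atoms if a["leads"]}

print(cost_per_lead(channel_atoms))  # {'website': 500.0, 'email': 80.0, 'events': 600.0}
```

The senior marketer never touches the individual web events; the website’s entire front-line story arrives as a single number in one row.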

But regardless of the level at which you’re operating, the most important thing to remember in data-driven marketing is that exploration is different from confirmation. Confirmation reveals the performance of your different marketing efforts to help drive decisions, but such data is often locked in existing assumptions and interpretations of the underlying atomic data. Exploration unlocks the possibilities of new ideas and innovation, but requires a proof mechanism, such as controlled experiments, to confirm that those interpretations of such data actually work.

They’re not the same, but they’re both important to effective data-driven marketing.


Scott, this is a great article on data-driven marketing. What I like best is how you explain the difference between the way web marketers work with data versus the next management level up versus data and marketing scientists. And, you connect the dots between exploration and confirmation through experimentation. Seems an obvious road map, but one that frequently gets muddled. Thanks for taking the time.

Andrea Hadley

Thanks, Andrea — glad you found it useful!